A prompt chain is a series of prompts that connect together so you can do more complex tasks than you could achieve with any single prompt. In a prompt chain, the result of one prompt usually becomes part of the next prompt, thus forming a chain of prompts.

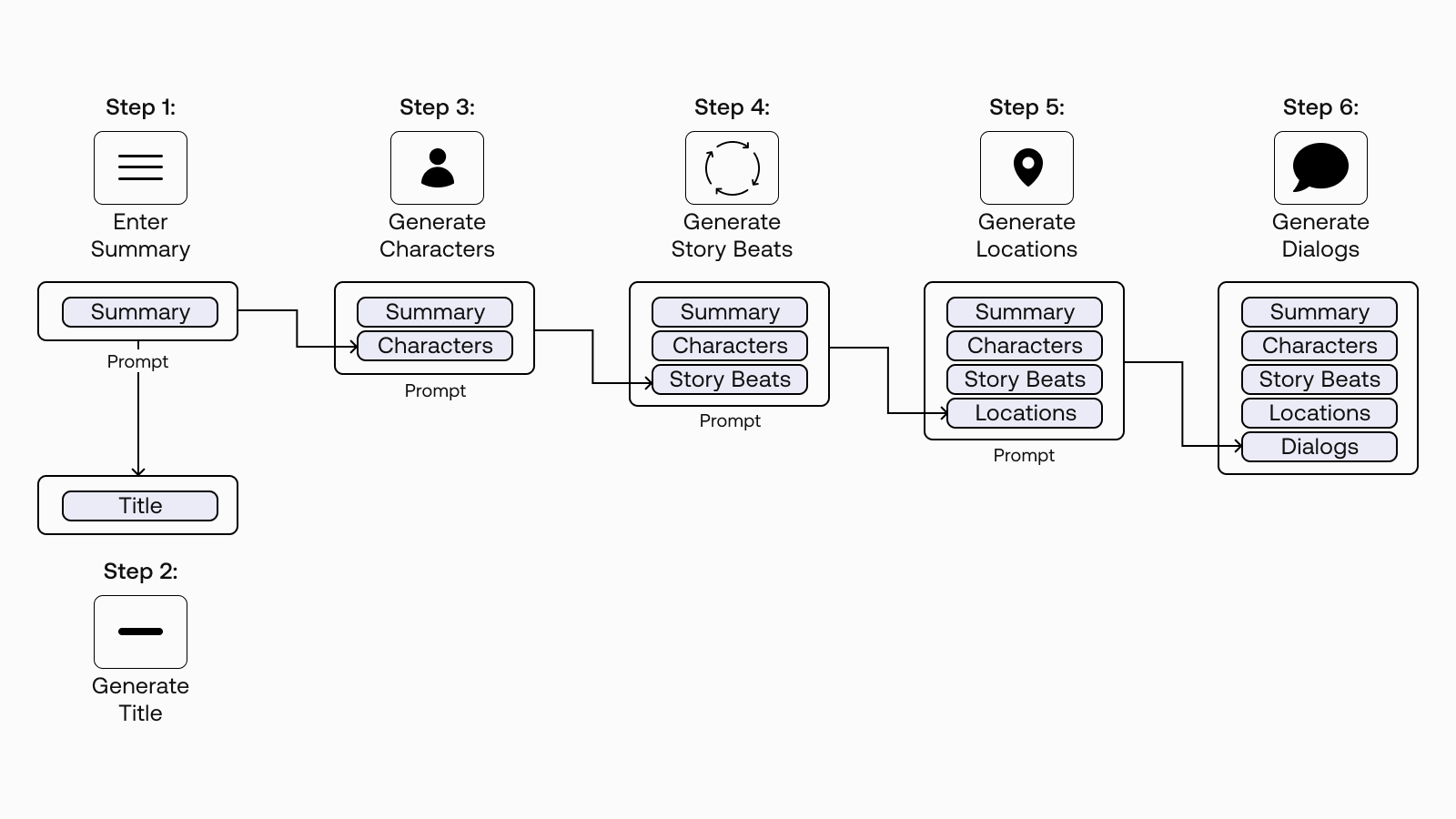

For example, let’s say you want to write a screenplay. Instead of asking ChatGPT to write the whole script with a single prompt, you could break the process down into discrete steps. First, you give ChatGPT a short summary of the play and ask it for ideas for great titles. In the next prompt, you include the summary and the title as context and ask ChatGPT to come up with your characters. After that, you use the summary, title, and character descriptions to ask for the story’s main beats. Then, you could ask ChatGPT to determine the locations based on those beats and characters. Finally, you use all this information to generate the play’s dialogue in the last step.

This example came from a post on Cohere’s blog and is based on a research paper about using large language models to co-write screenplays and theatre scripts. I will link to both at the end of this post in case you want to see the actual prompts for each step.

The point here is that each prompt builds on the previous prompt. All we provided was a brief outline of what the play should be about, and by the fifth prompt we had a title, fleshed-out characters, a story structure, locations, and actual dialogue.
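To make the flow concrete, here is a minimal Python sketch of the five-step screenplay chain. It is not the code from the Cohere post or the paper. The `llm` argument is a hypothetical stand-in for whatever function sends a prompt to your model and returns its text, and the instruction wording is my own paraphrase of the steps.

```python
from typing import Callable, Dict

# Hypothetical stand-in for a real model call: prompt in, generated text out.
LLM = Callable[[str], str]


def run_screenplay_chain(summary: str, llm: LLM) -> Dict[str, str]:
    """Run the five steps in order, feeding each result into later prompts."""
    ctx = {"summary": summary}
    # (output name, instruction) pairs; the wording is illustrative only.
    steps = [
        ("title", "Suggest a great title for this play."),
        ("characters", "Describe the main characters."),
        ("beats", "List the story's main beats."),
        ("locations", "Determine the locations for these beats and characters."),
        ("dialogue", "Write the play's dialogue."),
    ]
    for key, instruction in steps:
        # Everything generated so far becomes context for the next prompt.
        context = "\n\n".join(f"{k.upper()}:\n{v}" for k, v in ctx.items())
        ctx[key] = llm(f"{context}\n\n{instruction}")
    return ctx


def fake_llm(prompt: str) -> str:
    """Placeholder model that echoes the instruction so the sketch runs offline."""
    return f"<response to: {prompt.splitlines()[-1]}>"


if __name__ == "__main__":
    result = run_screenplay_chain("A keeper refuses to leave a lighthouse.", fake_llm)
    for name, text in result.items():
        print(name, "->", text)
```

Swapping `fake_llm` for a function that calls a real model is the only change needed to try this for real. The chain logic itself stays the same.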

Prompt chaining first became a useful technique because, until recently, language models had severe context length limitations. If you wanted to write a much longer play, you could take this even further and chain together prompts that generate the dialogue for each individual scene separately.
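Generating dialogue scene by scene could look something like the sketch below. It assumes you already have the shared context (summary, title, characters, locations) as one string and one beat per scene. The `llm` callable is again a hypothetical placeholder for a real model call, and whether to also pass in earlier scenes is a design choice this sketch leaves out.

```python
from typing import Callable, List

# Hypothetical stand-in for a real model call: prompt in, generated text out.
LLM = Callable[[str], str]


def write_dialogue_by_scene(shared_context: str, beats: List[str], llm: LLM) -> List[str]:
    """Issue one focused prompt per scene instead of one prompt for the whole play."""
    scenes = []
    for number, beat in enumerate(beats, start=1):
        # Each prompt carries the shared context plus only this scene's beat,
        # which keeps every individual request short.
        prompt = (
            f"{shared_context}\n\n"
            f"Scene {number} beat: {beat}\n\n"
            "Write the dialogue for this scene only."
        )
        scenes.append(llm(prompt))
    return scenes
```

Because each request covers a single scene, the length of the finished play is no longer bounded by how much the model can produce in one response.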

Another advantage of prompt chaining is that it lets you get involved at each step. If you were to ask for everything in a single prompt you wouldn’t be able to review and modify things before moving on to the next step. A prompt chain gives you lots of control over the final result in a complex task.
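One way to build that review step into a chain is to pass every draft through a checkpoint before it becomes context for the next prompt. The sketch below assumes two hypothetical callables: `llm`, which stands in for a real model call, and `review`, which receives the step name and the draft and returns the text you want to keep. In an interactive script, `review` might simply show the draft and read your edited version from the keyboard.

```python
from typing import Callable, Dict, List, Tuple

# Both types are hypothetical placeholders for illustration.
LLM = Callable[[str], str]            # prompt in, generated text out
Reviewer = Callable[[str, str], str]  # (step name, draft) -> approved text


def run_chain_with_review(
    summary: str,
    steps: List[Tuple[str, str]],
    llm: LLM,
    review: Reviewer,
) -> Dict[str, str]:
    """Run the chain, letting a reviewer approve or edit each result."""
    ctx = {"summary": summary}
    for key, instruction in steps:
        context = "\n\n".join(f"{k}: {v}" for k, v in ctx.items())
        draft = llm(f"{context}\n\n{instruction}")
        # Only the reviewed text is stored, so later prompts build on
        # what you approved rather than on the raw draft.
        ctx[key] = review(key, draft)
    return ctx
```

If you rewrite the title at the first checkpoint, every later prompt sees your title and not the model's.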

Even as context windows grow larger, language models still seem to have some limit on the conceptual complexity of what they can do in a single prompt. A prompt with a single focused task will usually outperform a more elaborate prompt with multiple asks. Using a series of smaller, focused prompts tends to lead to better quality results than trying to squash everything you want done into a single prompt.

It is not clear where these conceptual boundaries are or how many things you can ask a language model to do in a single prompt, but it’s similar to what you would expect with a human. Compare asking someone to write a play from a tiny description to giving them a five-step process for writing the play with instructions for each step.

If you want to do increasingly complex things with ChatGPT and find yourself stuffing everything into one giant prompt, try breaking the process down into discrete steps. Create a focused prompt for each step, then chain them together to get the result you want.